Fake evidence, new expectations and morality: Judges talk about AI in the law

On Wednesday, Judge Lino S. Lipinsky de Orlov shared with an audience of lawyers a story from last year's judicial conference about a trial judge who suspected that an attorney representing a domestic violence victim had submitted to the court a fake photo of the badly beaten client.

“The judge asked: Was this AI generated? And the lawyer withdrew the exhibit,” said Lipinsky, a member of the state’s Court of Appeals. “Then that led to a discussion — how can we as trial judges determine whether an exhibit is a fake? It’s not easy.”

Lipinsky and Colorado Supreme Court Justice Maria E. Berkenkotter spoke at the Alfred A. Arraj U.S. Courthouse in Denver about their work over the past few years to identify opportunities and pitfalls for artificial intelligence in the legal profession. Nationwide, lawyers have received sanctions for using ChatGPT to file documents containing AI-invented citations. On the other hand, some judges have broadcast their experimentation with generative AI while researching tricky questions in cases.

Last month, Colorado’s Court of Appeals warned for the first time that parties may face penalties for submitting briefs containing fake citations — known as “hallucinations” — created by AI tools. Lipinsky authored that decision.

A state Supreme Court subcommittee has been formed to address potential changes to the rules of professional conduct that would accommodate the use of emerging AI tools. Lipinsky said the subcommittee is close to finalizing its conclusions. He indicated there will be a majority report and a minority report, meaning subcommittee members disagreed about the path forward.

There will also be a recommendation that the Supreme Court create a new committee focused on technology, Lipinsky added.

“I’m not gonna talk a lot about what constitutes a violation of any particular rule of professional conduct here in Colorado,” said Berkenkotter, given that her court has the final say over disciplinary appeals. She reminded attorneys that while AI can create problems, it is also present in many mundane aspects of life, from the movie recommendations Netflix gives its users to curated playlists on Spotify.

Colorado Supreme Court Justice Maria E. Berkenkotter speaks to students at Pine Creek High School in Colorado Springs on Thursday, Nov. 17, 2022. (The Gazette, Parker Seibold)

Lipinsky emphasized that existing tools for lawyers, including those that display relevant court cases in response to a search, are AI-powered. For courts that have adopted prohibitions or rules governing AI, “I think some of those judicial officers, with all due respect, don’t understand that we have been using AI as a profession for years.”

U.S. District Court Judge S. Kato Crews, who is the newest member of the district court’s bench, became the first to adopt a rule for his courtroom requiring lawyers to confirm in their filings whether they used any generative AI tools to write their documents.

Earlier this month, Crews told Colorado Politics that no one had yet attested to using AI, although he had ordered some lawyers to refile their submissions because they neglected to provide the certification. Crews also recently reworded his protocols to clarify the intent behind the rule: to avoid false citations and to encourage lawyers to check the output of any AI tools they use.

“I did amend that section of my Standing Order in December 2024 to (hopefully) make more clear the reason why I require the certification and to clarify that I’m not opposed to its use,” he said in an email.

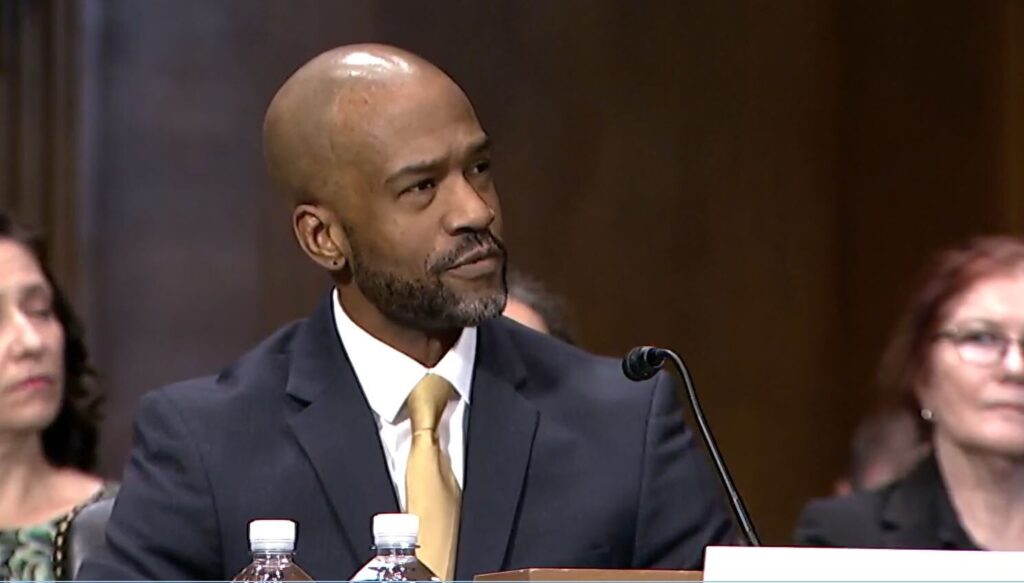

U.S. Magistrate Judge S. Kato Crews testifies at his confirmation hearing to be a district court judge on March 22, 2023.

A similar effort could be underway more broadly in Colorado. On Friday, the Supreme Court’s civil rules committee will consider a suggestion from Denver Probate Court Presiding Judge Elizabeth D. Leith to add an AI-certification requirement, resembling Crews’, for civil litigants in state courts.

During his presentation, Lipinsky said courts across the country are also cracking down on AI use in other scenarios. He referenced a Jan. 10 order from a Minnesota federal judge rebuking a witness (Jeff Hancock, a Stanford University expert on deception and technology) for submitting written testimony containing false, AI-generated citations.

“The irony. Professor Hancock, a credentialed expert on the dangers of AI and misinformation, has fallen victim to the siren call of relying too heavily on AI — in a case that revolves around the dangers of AI, no less,” wrote U.S. District Court Judge Laura M. Provinzino. In such circumstances, she continued, the civil rules “may now require attorneys to ask their witnesses whether they have used AI in drafting their declarations and what they have done to verify any AI-generated content.”

“Pretty broad,” Lipinsky said of Provinzino’s conclusion. “As far as I know, this is the first and only decision — at least from a federal court — that expands the (lawyer’s) obligation to the citations in your expert’s filings.”

Colorado Court of Appeals Judge Lino S. Lipinsky de Orlov

Attorney Katayoun Donnelly, who also participated in the discussion because of her own work on AI and the law, cautioned that the focus has largely been on the incorrect outputs that artificial intelligence can generate. However, there is another problem, she said: AI-generated responses are coming from an entity that has no morality, judgment or understanding of social consequences.

“When we talk about our assistants, whether they’re judicial assistants, whether they’re paraprofessionals, whether they’re paralegals — we rely on their judgments as human beings,” Donnelly said. But “this thing you are using does not have any of those capabilities.”

Lipinsky, on the other hand, wondered whether it might someday be problematic for a lawyer to forgo AI and rely on human labor for a time-intensive task.

“If you could conduct research, brainstorm on a response to a motion in 90 seconds” using AI, he asked, “can you argue to a court when you seek to recover fees, ‘It was reasonable for my first-year associate to spend 10 hours researching those issues?'”

The discussion was sponsored by the Faculty of Federal Advocates.