Appeals court warns lawyers, litigants: You will get in trouble for citing AI-invented cases

Colorado’s second-highest court put attorneys and litigants on notice for the first time on Thursday that they will face consequences if they use artificial intelligence to submit filings with fake citations.

A three-judge panel for the Court of Appeals declined to sanction a self-represented plaintiff after he acknowledged and apologized for his mistake. At the same time, Judge Lino S. Lipinsky de Orlov warned that the court may not forgive a person’s failure to monitor the output of generative AI programs in the future.

“Reliance on a GAI tool not trained with legal authorities can ‘lead both unwitting lawyers and nonlawyers astray,’” he wrote in a Dec. 26 opinion. “For these reasons, individuals using the current generation of general-purpose GAI tools to assist with legal research and drafting must be aware of the tools’ propensity to generate outputs containing fictitious legal authorities and must ensure that such fictitious citations do not appear in any court filing.”

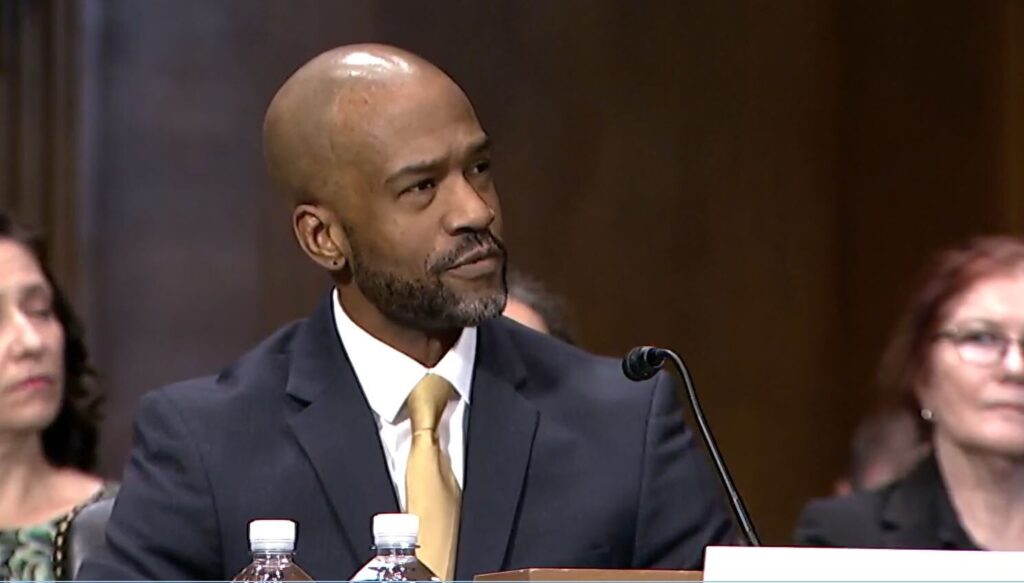

Colorado Court of Appeals Judge Lino S. Lipinsky de Orlov

As the public has gained broader access to generative AI tools like ChatGPT, courts have begun to police legal filings for “hallucinations” — meaning quotes or citations to cases the tool has invented on its own. Last year, Colorado’s presiding disciplinary judge imposed the first professional sanction on an attorney for using artificial intelligence to generate fake case citations in a legal brief and then lying about it.

One federal judge is even requiring litigants and lawyers to state whether they have used AI to prepare their legal filings and, if so, whether they have checked the accuracy of the output.

Outside the courtroom, Colorado is one of the states actively exploring how to integrate AI into the legal profession. This March, the Supreme Court’s Advisory Committee on the Practice of Law formed a subcommittee to determine if rule changes are necessary to accommodate emerging artificial intelligence-powered legal tools.

In addition, Lipinsky is one of a handful of judges in Colorado who have recently developed expertise in the use of AI in the legal profession, alongside Supreme Court Justice Maria E. Berkenkotter and U.S. Magistrate Judge Maritza Dominguez Braswell.

Earlier this year, Berkenkotter and Lipinsky published an article arguing for the reexamination of several rules governing the legal profession. They urged lawyers and judges to think critically about how generative AI could be safely integrated into the practice of law, and even suggested attorneys might better serve their clients by using AI to perform simple or repetitive tasks.

“Should a judge alert self-represented litigants to the availability, benefits, and risks of generative AI resources?” Berkenkotter and Lipinsky wrote. “Similarly, if lawyers representing clients are using generative AI to create initial drafts of pleadings and other court filings, should or must a judge allow an unrepresented litigant to do the same?”

Colorado Supreme Court Justice Maria E. Berkenkotter speaks to students at Pine Creek High School in Colorado Springs on Thursday, Nov. 17, 2022. (The Gazette, Parker Seibold)

In the case before the Court of Appeals, Alim Al-Hamim challenged an Arapahoe County judge’s order dismissing his lawsuit against his landlord. Representing himself, Al-Hamim submitted his brief to the appellate court in June.

Nearly four months later, the appellate panel advised the parties it was “unable to locate” eight court cases Al-Hamim had cited. It directed Al-Hamim to provide the cases to the panel within 14 days.

“If the subject cases are fictitious,” the panel continued, “the court further ORDERS appellant to show cause why he should not be sanctioned.”

The panel also ordered the defendants, Al-Hamim’s landlord, to explain why they had neglected to tell the Court of Appeals about the fake cases.

In his response, Al-Hamim acknowledged he had cited cases that did not exist and said that, as a non-attorney, he had resorted to AI to help him file his appeal on time.

“He apologizes profusely and offers in explanation that his homeless condition has severely hampered his ability to access adequate legal counsel and materials, and he has had to rely upon Artificial Intelligence,” Al-Hamim wrote.

The defendants added that Al-Hamim’s fake case citations did not appear relevant to his appeal, “and thus were not cite-checked.”

Given the circumstances and the novelty of the problem, Lipinsky wrote, it would be “disproportionate” to penalize Al-Hamim. He pointed to other decisions of state and federal courts in the past two years taking a similar approach to first-time offenses.

Although the opinion was focused on the Court of Appeals and on AI programs that are not specifically trained on legal content, the panel left open the door for other acceptable uses of generative AI.

“In 2023 and 2024, various companies introduced GAI tools trained using legal authorities. Those legal GAI tools are not implicated in this appeal, and we offer no opinion on their ability to provide accurate responses to queries concerning legal issues,” Lipinsky added.

The case is Al-Hamim v. Star Hearthstone, LLC et al.